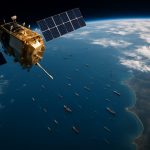

Satellite AIS Transforming Maritime Surveillance and Vessel Tracking

Space Spies of the Sea: Unveiling the Satellite AIS Revolution in Global Maritime Monitoring. Global Maritime Tracking Market Landscape; Emerging Technologies in Satellite AIS and Maritime Intelligence; Key Players and Strategic Moves in…

Bosnia’s Digital Divide: Unexpected Expansion and Persistent Connectivity Challenges

Bosnia’s Internet Transformation: Unveiling Rapid Progress and Startling Connectivity Disparities. Current State of Bosnia’s Internet Market; Emerging Technologies and Adoption Patterns; Key Players and Market Dynamics; Projected Expansion and User Growth; Connectivity Variations Across Regions; Anticipated…

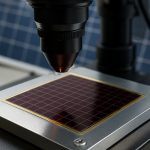

Perovskite Photovoltaic Technology Market 2025: Rapid Growth Driven by 30%+ Efficiency Gains & Commercialization Surge

Perovskite Photovoltaic Technology Market Report 2025: Unveiling Breakthrough Efficiency, Market Expansion, and Global Opportunities. Explore Key Trends, Forecasts, and Strategic Insights for the Next 3–5 Years. Executive Summary & Market…

Smart Metamaterials for Wireless Energy Transfer Market 2025: Surging Demand Drives 18% CAGR Through 2030

2025 Smart Metamaterials for Wireless Energy Transfer Market Report: In-Depth Analysis of Growth Drivers, Technology Innovations, and Global Opportunities. Executive Summary & Market Overview; Key Technology Trends in Smart Metamaterials…

The Surprising Ways Fashion, Energy, and Women Are Shaping Climate Solutions in 2025

Discover how fashion, renewable energy, and women's leadership converge to spark groundbreaking climate solutions in 2025.

Bitcoin Ready to Explode? Trump’s Fed Shakeup and Wall Street Chaos Could Send Crypto Skyrocketing

Bitcoin holds its breath as Trump teases a game-changing move at the Fed — investors eye the dollar, inflation, and a crypto breakout.

Wind Power Myths Busted: The Truth About Whales, Birds, and “Greenwashing” in 2025

Discover the real facts about wind energy's impact on wildlife, the environment, and the grid—debunking 2025's most viral myths.

India’s Renewable Power Surge: NLC and MAHAPREIT Join Forces for Massive Maharashtra Energy Expansion

NLC India Renewables and MAHAPREIT set sights on 5,000 MW energy boost in Maharashtra—here’s what this partnership means for India’s green future.

Stellar Lumens (XLM) Surges in 2025: Will It Finally Smash the $1 Barrier?

Stellar Lumens (XLM) accelerates its global payments revolution in 2025, with price predictions and network upgrades fueling fresh investor optimism.

Solid-State Battery Precursor Market Set to Skyrocket: Experts Forecast 730% Growth by 2031

Inside the Solid-State Battery Revolution: Why 2025–2031 Will Be a Game-Changer for Global Energy Storage. The global solid-state battery precursor market is primed for explosive growth, with a 35.9% CAGR…